OpenAI has announced another major AI milestone with the release of GPT-4, which brings significant improvements over GPT-3.5.

According to OpenAI, the company spent the last two years rebuilding its AI training stack from the ground up in collaboration with Microsoft Azure, and GPT-3.5 was the first test run of that new system. Since that release the company has found and fixed bugs, and it stated that the training run of GPT-4 was “unprecedentedly stable.”

The company has also applied lessons from its adversarial testing program and from ChatGPT.

One example of the improvement: GPT-4 passes a simulated bar exam with a score in the top 10% of test takers, while GPT-3.5 scored in the bottom 10%.

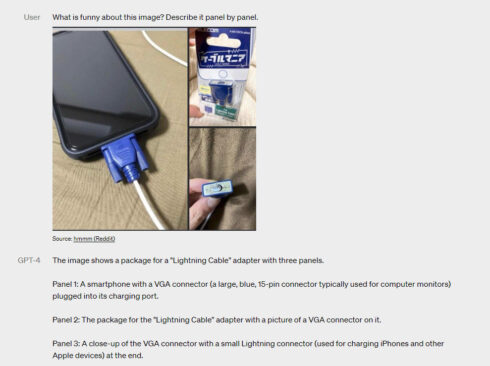

GPT-4 can accept images as well as text as input. In an example OpenAI shared, a user submits a photo of a phone with a VGA cable plugged into it instead of a normal charging cable and asks what is funny about the photo.

The response: “A smartphone with a VGA connector (a large, blue, 15-pin connector typically used for computer monitors) plugged into its charging port … The humor in this image comes from the absurdity of plugging a large, outdated VGA connector into a small, modern smartphone charging port.”

Despite the improvements over the previous model, OpenAI admits that GPT-4 shares similar limitations with earlier models. For example, it can still state incorrect facts or make reasoning errors.

However, these “hallucinations” now occur less often. GPT-4 scores 40% higher than GPT-3.5 on evaluations for factuality.

GPT-4 also improves on the TruthfulQA benchmark, which tests a model’s ability to separate facts from a set of incorrect statements.

Another limitation is that its training data ends in September 2021, so it lacks information about more recent events.

OpenAI has also improved how the model responds to harmful requests. A new safety reward signal was added to the training process to teach the model to better refuse requests for harmful content while also lowering the chance that it refuses a valid request. To do this, OpenAI collected a diverse dataset and applied the signal to both allowed and disallowed categories.

Compared to GPT-3.5, GPT-4 is 82% less likely to respond to requests for disallowed content, and responds to sensitive requests like medical advice in accordance with OpenAI policies 29% more often.
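To make those percentages concrete, here is a minimal Python sketch of the arithmetic. It assumes both figures are relative changes against GPT-3.5, and the baseline rates are purely hypothetical placeholders, since the article does not give GPT-3.5’s actual rates.

```python
def apply_relative_change(baseline_rate: float, relative_change: float) -> float:
    """Return a rate after a relative change, e.g. -0.82 for '82% less likely'."""
    if not 0.0 <= baseline_rate <= 1.0:
        raise ValueError("baseline_rate must be between 0 and 1")
    return baseline_rate * (1.0 + relative_change)


# Hypothetical GPT-3.5 baselines, chosen only for illustration.
HYPOTHETICAL_DISALLOWED_BASELINE = 0.10  # responds to 10% of disallowed requests
HYPOTHETICAL_POLICY_BASELINE = 0.50      # follows policy on 50% of sensitive requests

# "82% less likely" read as a relative reduction of the baseline rate.
disallowed_after = apply_relative_change(HYPOTHETICAL_DISALLOWED_BASELINE, -0.82)

# "29% more often" read as a relative increase of the baseline rate.
policy_after = apply_relative_change(HYPOTHETICAL_POLICY_BASELINE, 0.29)

print(f"Disallowed-content response rate: {disallowed_after:.1%}")
print(f"Policy-compliant response rate: {policy_after:.1%}")
```

Under these assumed baselines, a 10% response rate to disallowed requests would fall to about 1.8%, and a 50% policy-compliance rate would rise to about 64.5%. The real rates depend on GPT-3.5’s actual baselines, which are not stated here.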

“GPT-4 and successor models have the potential to significantly influence society in both beneficial and harmful ways. We are collaborating with external researchers to improve how we understand and assess potential impacts, as well as to build evaluations for dangerous capabilities that may emerge in future systems. We will soon share more of our thinking on the potential social and economic impacts of GPT-4 and other AI systems,” OpenAI wrote in a blog post.

Subscribers of ChatGPT Plus can use GPT-4 through chat.openai.com, currently with a usage cap that OpenAI will continue to adjust based on demand. The company says that eventually it will also offer GPT-4 queries to users who don’t have a paid subscription.

OpenAI also announced that it is open-sourcing OpenAI Evals, a framework for automatically evaluating model performance.

OpenAI uses the framework to guide model development, and users can now use it to track performance across models.

“We invite everyone to use Evals to test our models and submit the most interesting examples. We believe that Evals will be an integral part of the process for using and building on top of our models, and we welcome direct contributions, questions, and feedback,” OpenAI wrote.
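As a rough illustration of what tracking performance across models can look like, here is a minimal Python sketch of a generic exact-match evaluation loop. It does not use the OpenAI Evals API. Every name in it (`exact_match_accuracy`, `compare_models`, the stub models, and the sample data) is hypothetical and invented for this example.

```python
from typing import Callable, Dict, List, Tuple

Sample = Tuple[str, str]      # (prompt, expected answer)
Model = Callable[[str], str]  # any function that maps a prompt to an answer


def exact_match_accuracy(model: Model, samples: List[Sample]) -> float:
    """Fraction of samples where the model's answer matches the expected one."""
    if not samples:
        raise ValueError("samples must not be empty")
    correct = sum(
        1
        for prompt, expected in samples
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return correct / len(samples)


def compare_models(models: Dict[str, Model], samples: List[Sample]) -> Dict[str, float]:
    """Score every model on the same samples so results are comparable."""
    return {name: exact_match_accuracy(model, samples) for name, model in models.items()}


# Hypothetical stand-ins for real models: simple lookup tables.
def stub_model_a(prompt: str) -> str:
    return {"2 + 2 =": "4", "Capital of France?": "Paris"}.get(prompt, "unknown")


def stub_model_b(prompt: str) -> str:
    return {"2 + 2 =": "4"}.get(prompt, "unknown")


SAMPLES: List[Sample] = [
    ("2 + 2 =", "4"),
    ("Capital of France?", "paris"),
]

scores = compare_models({"model_a": stub_model_a, "model_b": stub_model_b}, SAMPLES)
for name, score in scores.items():
    print(f"{name}: {score:.0%}")
```

Scoring every model on one fixed sample set is what makes the numbers comparable from one model version to the next. A real framework would add more flexible grading than exact string matching.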