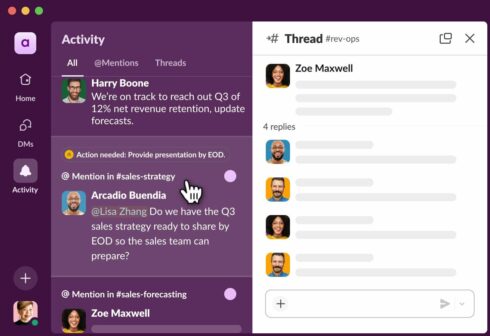

Slack’s AI search now works across an organization’s entire knowledge base

Slack is introducing a number of new AI-powered tools to make team collaboration easier and more intuitive.

“Today, 60% of organizations are using generative AI. But most still fall short of its productivity promise. We’re changing that by putting AI where work already happens — in your messages, your docs, your search — all designed to be intuitive, secure, and built for the way teams actually work,” Slack wrote in a blog post.

The new enterprise search capability will enable users to search not just in Slack, but in any app that is connected to Slack. It can search across systems of record like Salesforce or Confluence, file repositories like Google Drive or OneDrive, developer tools like GitHub or Jira, and project management tools like Asana.

“Enterprise search is about turning fragmented information into actionable insights, helping you make quicker, more informed decisions, without leaving Slack,” the company explained.

The platform is also getting AI-generated channel recaps and thread summaries, helping users catch up on conversations quickly. It is also introducing AI-powered translations that let users read and respond in their preferred language.

Anthropic’s Claude Code gets new analytics dashboard to provide insights into how teams are using AI tooling

Anthropic has announced the launch of a new analytics dashboard in Claude Code to give development teams insights into how they are using the tool.

It tracks metrics such as lines of code accepted, suggestion acceptance rate, total user activity over time, total spend over time, average daily spend for each user, and average daily lines of code accepted for each user.

These metrics can help organizations understand developer satisfaction with Claude Code suggestions, track code generation effectiveness, and identify opportunities for process improvements.
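As a rough illustration of how two of these metrics could be derived, the sketch below computes a suggestion acceptance rate and the average daily lines of code accepted per user. The record layout and field names are assumptions made for this example and do not reflect the dashboard's actual schema.

```python
from collections import defaultdict

# Hypothetical usage records; the field names are assumptions for
# illustration only, not the dashboard's real data model.
events = [
    {"user": "ana", "day": "day-1", "suggested": 10, "accepted": 7, "lines_accepted": 120},
    {"user": "ana", "day": "day-2", "suggested": 8, "accepted": 4, "lines_accepted": 60},
    {"user": "ben", "day": "day-1", "suggested": 5, "accepted": 5, "lines_accepted": 40},
]


def acceptance_rate(records):
    """Share of offered suggestions that were accepted."""
    suggested = sum(r["suggested"] for r in records)
    accepted = sum(r["accepted"] for r in records)
    return accepted / suggested if suggested else 0.0


def avg_daily_lines_per_user(records):
    """Average lines accepted per active day, keyed by user."""
    per_user_days = defaultdict(lambda: defaultdict(int))
    for r in records:
        per_user_days[r["user"]][r["day"]] += r["lines_accepted"]
    return {user: sum(days.values()) / len(days) for user, days in per_user_days.items()}


rate = acceptance_rate(events)
daily_lines = avg_daily_lines_per_user(events)
```

Here the rate is the total accepted suggestions divided by the total offered, and each user's daily average is taken only over the days on which that user was active.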

Mistral launches first voice model

Voxtral is an open-weight model for speech understanding that Mistral says offers “state-of-the-art accuracy and native semantic understanding in the open, at less than half the price of comparable APIs. This makes high-quality speech intelligence accessible and controllable at scale.”

It comes in two model sizes: a 24B version for production-scale applications and a 3B version for local deployments. Both sizes are available under the Apache 2.0 license and can be accessed via Mistral’s API.

JFrog releases MCP server

The MCP server will allow users to create and view projects and repositories, get detailed vulnerability information from JFrog, and review the components in use at an organization.

“The JFrog Platform delivers DevOps, Security, MLOps, and IoT services across your software supply chain. Our new MCP Server enhances its accessibility, making it even easier to integrate into your workflows and the daily work of developers,” JFrog wrote in a blog post.

JetBrains announces updates to its coding agent Junie

Junie is now fully integrated into GitHub, enabling asynchronous development with features such as the ability to delegate multiple tasks simultaneously, the ability to make quick fixes without opening the IDE, team collaboration directly in GitHub, and seamless switching between the IDE and GitHub. Junie on GitHub is currently in an early access program and only supports JVM and PHP.

JetBrains also added support for MCP to enable Junie to connect to external sources. Other new features include 30% faster task completion speed and support for remote development on macOS and Linux.

Gemini API gets first embedding model

These types of models generate embeddings for words, phrases, sentences, and code, to provide context-aware results that are more accurate than keyword-based approaches. “They efficiently retrieve relevant information from knowledge bases, represented by embeddings, which are then passed as additional context in the input prompt to language models, guiding it to generate more informed and accurate responses,” the Gemini docs say.
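The retrieval step described in that quote can be sketched in a few lines. The example below is a minimal illustration that uses hand-written three-dimensional vectors in place of real embeddings; it does not call the Gemini API, and the document titles, vectors, and function names are all assumptions for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy knowledge base: in practice each vector would come from an
# embedding model; these values are made up for illustration.
knowledge_base = {
    "How to reset a password": [0.9, 0.1, 0.0],
    "Quarterly sales report": [0.0, 0.2, 0.9],
    "Account login troubleshooting": [0.8, 0.3, 0.1],
}


def retrieve(query_embedding, kb, top_k=2):
    """Return the top_k document titles most similar to the query."""
    ranked = sorted(
        kb,
        key=lambda doc: cosine_similarity(query_embedding, kb[doc]),
        reverse=True,
    )
    return ranked[:top_k]


# Pretend embedding of the question "I can't sign in."
query_embedding = [1.0, 0.2, 0.0]
context = retrieve(query_embedding, knowledge_base)

# The retrieved text is passed to the language model as extra context.
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: I can't sign in."
```

Because ranking is done on vector similarity rather than shared keywords, the login and password documents rank above the sales report even though none of them contains the phrase "sign in".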

The embedding model in the Gemini API supports over 100 languages and an input length of 2,048 tokens. It will be offered via both free and paid tiers to enable developers to experiment with it for free and then scale up as needed.

Kong AI Gateway 3.11 introduces new method for reducing token costs

Kong has introduced the latest update to Kong AI Gateway, a solution for securing, governing, and controlling LLM consumption from popular third-party providers.

Kong AI Gateway 3.11 introduces a new plugin that reduces token costs, several new generative AI capabilities, and support for AWS Bedrock Guardrails.

The new prompt compression plugin removes padding and redundant words or phrases. This approach preserves 80% of the intended semantic meaning of the prompt, but the removal of unnecessary words can lead to up to a 5x reduction in cost.
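To make the idea concrete, here is a deliberately naive sketch of prompt compression. It is not Kong's algorithm: the filler-word list, the function names, and the use of whitespace-separated words as a stand-in for tokens are all assumptions made for illustration.

```python
import re

# Hypothetical filler words; a real compressor would use a far more
# sophisticated notion of redundancy.
FILLER = {"please", "kindly", "basically", "really", "very", "just"}


def compress_prompt(prompt):
    """Collapse whitespace, drop filler words and repeated adjacent words."""
    words = re.sub(r"\s+", " ", prompt).strip().split(" ")
    kept = []
    for word in words:
        key = word.lower().strip(".,!?")
        if key in FILLER:
            continue  # filler word adds little meaning
        if kept and kept[-1].lower() == word.lower():
            continue  # same word repeated back to back
        kept.append(word)
    return " ".join(kept)


def reduction_factor(original, compressed):
    """Ratio of word counts, used here as a crude proxy for token cost."""
    return len(original.split()) / len(compressed.split())


original = "Please   just summarize the the report,   really   briefly."
compressed = compress_prompt(original)
factor = reduction_factor(original, compressed)
```

If a provider bills in proportion to prompt tokens, a 5x cost reduction corresponds to a compressed prompt roughly one fifth the length of the original. This toy example only halves the word count.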

OutSystems launches Agent Workbench

Agent Workbench, now in early access, allows companies to create agents that have enterprise-grade security and controls.

Agents can integrate with custom AI models or third-party ones like Azure OpenAI or AWS Bedrock. It contains a unified data fabric for connecting to enterprise data sources, including existing OutSystems 11 data and actions, relational databases, data lakes, and knowledge retrieval systems like Azure AI Search.

It comes with built-in monitoring features, error tracing, and guardrails, providing insights into how AI agents are behaving throughout their lifecycle.

Perforce launches Perfecto AI

Perfecto AI is a testing model within Perfecto’s mobile testing platform that can generate tests and adapt in real time to UI changes, failures, and changing user flows.

According to Perforce, Perfecto AI’s early testing has shown 50-70% efficiency gains in test creation, stabilization, and triage.

“With this release, you can create a test before any code is written—true Test-Driven Development (TDD)—contextual validation of dynamic content like charts and images, and triage failures in real time—without the legacy baggage of scripts and frameworks,” said Stephen Feloney, VP of product management at Perforce. “Unlike AI copilots that simply generate scripts tied to fragile frameworks, Perforce Intelligence eliminates scripts entirely and executes complete tests with zero upkeep—eliminating rework, review, and risk.”

Amazon launches spec-driven AI IDE, Kiro

Amazon is releasing a new AI IDE to rival platforms like Cursor or Windsurf. Kiro is an agentic editor that utilizes spec-driven development to combine “the flow of vibe coding” with “the clarity of specs.”

According to Amazon, developers use specs for planning and clarity, and they can benefit agents in the same way.

Specs in Kiro are artifacts that can be used whenever a feature needs to be thought through in-depth, to refactor work that requires upfront planning, or in situations when a developer wants to understand the behavior of a system.

Kiro also features hooks, which the company describes as event-driven automations that trigger an agent to execute a task in the background. According to Amazon, Kiro hooks are sort of like an experienced developer catching the things you’ve missed or completing boilerplate tasks as you work.
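The general pattern behind event-driven hooks can be sketched as a small registry that maps event names to handlers. This is a generic illustration, not Kiro's implementation or configuration format: the `HookRegistry` class, the `file_saved` event, and the handler names are all hypothetical, and the handlers run synchronously here rather than in the background.

```python
from collections import defaultdict


class HookRegistry:
    """Maps event names to the handlers that should run when they fire."""

    def __init__(self):
        self._hooks = defaultdict(list)

    def on(self, event):
        """Decorator that registers a handler for the given event."""
        def register(fn):
            self._hooks[event].append(fn)
            return fn
        return register

    def emit(self, event, **payload):
        """Run every handler registered for the event, in order."""
        return [fn(**payload) for fn in self._hooks[event]]


hooks = HookRegistry()


@hooks.on("file_saved")
def refresh_tests(path):
    # Stand-in for handing a task to an agent.
    return f"agent task: refresh tests for {path}"


@hooks.on("file_saved")
def update_docs(path):
    return f"agent task: update docs for {path}"


results = hooks.emit("file_saved", path="src/app.py")
```

Saving a file fires one event, and every hook attached to it produces its own follow-up task, which is the sense in which routine work gets picked up without the developer asking for it.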

Akka introduces platform for distributed agentic AI

Akka, a company that provides solutions for building distributed applications, is introducing a new platform for scaling AI agents across distributed systems. Akka Agentic Platform consists of four integrated offerings: Akka Orchestration, Akka Agents, Akka Memory, and Akka Streaming.

Akka Orchestration allows developers to guide, moderate, and control multi-agent systems. It offers fault-tolerant execution, enabling agents to reliably complete their tasks even if there are crashes, delays, or infrastructure failures.

The second offering, Akka Agents, provides a design model and runtime for agentic systems, allowing creators to define how the agents gather context, reason, and act, while Akka handles everything else needed for them to run.

Akka Memory is durable, in-memory, sharded data that can be used to provide agents context, retain history, and personalize behavior. Data stays within an organization’s infrastructure, and is replicated, shared, and rebalanced across Akka clusters.

Finally, Akka Streaming offers continuous stream processing, aggregation, and augmentation of live data, metrics, audio, and video. Streams can be ingested from any source and they can stream between agents, Akka services, and external systems. Streamed inputs can trigger actions, update memory, or feed other Akka agents.

Clarifai announces MCP server hosting, OpenAI compatibility

Users will be able to upload and host their own tools, functions, and APIs as an MCP server that is fully hosted and managed by Clarifai.

The company is also introducing OpenAI-compatible APIs, which will allow users to integrate with more than 100 open-source and third-party models.

“Our MCP server hosting unleashes a new level of agent intelligence, allowing them to directly interact with an organization’s unique operational DNA and proprietary data sources. Paired with our OpenAI-compatible APIs, we’re not just accelerating deployment; we’re breaking down barriers, enabling developers to integrate these highly capable agents into their existing infrastructure almost instantly, driving rapid, impactful innovation,” said Artjom Shestajev, senior product manager at Clarifai.
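In practice, an OpenAI-compatible API means a client can send the same chat-completions request shape to a different base URL. The sketch below only builds such a request and does not send it; the base URL, API key, and model ID are placeholders, not Clarifai's actual values.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, user_message):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# All three values below are placeholders for illustration.
request = build_chat_request(
    base_url="https://example.invalid/v1",
    api_key="YOUR_API_KEY",
    model="hypothetical-model-id",
    user_message="Summarize the open vulnerabilities in this repository.",
)
```

Switching providers then comes down to changing the base URL, key, and model name, while the rest of the calling code stays the same.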
