OpenAI made a number of announcements today, including two new embedding models, updated GPT-4 Turbo preview, GPT-3.5 Turbo, and moderation models, and new tools for managing API usage.

According to OpenAI, “an embedding is a sequence of numbers that represents the concepts within content such as natural language or code.” Embeddings make it easier for machine learning models to understand the relationships between pieces of content.
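Content with related meaning tends to produce embeddings that point in similar directions, and cosine similarity is a common way to measure that. The minimal sketch below uses made-up four-number vectors (the names `cat`, `kitten`, and `invoice` are purely illustrative) rather than real model output, which would contain hundreds or thousands of numbers.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings", not produced by any OpenAI model.
cat = [0.9, 0.1, 0.0, 0.2]
kitten = [0.85, 0.15, 0.05, 0.25]
invoice = [0.0, 0.1, 0.95, 0.1]

# Related concepts should score higher than unrelated ones.
print(cosine_similarity(cat, kitten) > cosine_similarity(cat, invoice))  # True
```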

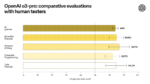

The two new embedding models are text-embedding-3-small and text-embedding-3-large. Text-embedding-3-small is a highly efficient model that outperforms its predecessor, text-embedding-ada-002: its average score on MIRACL, a multilingual retrieval benchmark, rose from 31.4% to 44.0%, and its average score on MTEB, an English-language benchmark, rose from 61.0% to 62.3%. It is also priced at one-fifth the cost of text-embedding-ada-002.
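To see what a fivefold price cut means in practice, here is a small cost estimate. It assumes the per-1,000-token launch prices OpenAI listed ($0.0001 for text-embedding-ada-002 and $0.00002 for text-embedding-3-small); prices change, so treat these as placeholders, and the corpus size is hypothetical.

```python
# Assumed launch prices in USD per 1,000 tokens; check OpenAI's current pricing.
PRICE_PER_1K = {
    "text-embedding-ada-002": 0.0001,
    "text-embedding-3-small": 0.00002,
}


def embedding_cost(model: str, tokens: int) -> float:
    """Estimated USD cost of embedding `tokens` tokens with `model`."""
    return tokens / 1000 * PRICE_PER_1K[model]


corpus_tokens = 50_000_000  # hypothetical corpus size
old = embedding_cost("text-embedding-ada-002", corpus_tokens)
new = embedding_cost("text-embedding-3-small", corpus_tokens)
print(f"ada-002: ${old:.2f}, 3-small: ${new:.2f}, ratio: {old / new:.1f}x")
```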

Text-embedding-3-large can create embeddings with up to 3,072 dimensions and also outperforms text-embedding-ada-002.
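More dimensions can capture more detail, but every extra number also has to be stored and compared. A back-of-the-envelope sketch, assuming each number is stored as a 32-bit float and ignoring any vector-database overhead (the collection size is hypothetical):

```python
BYTES_PER_FLOAT32 = 4  # assumption: one 32-bit float per dimension


def vector_bytes(dimensions: int) -> int:
    """Raw bytes needed to store one embedding."""
    return dimensions * BYTES_PER_FLOAT32


def collection_size_gb(num_vectors: int, dimensions: int) -> float:
    """Raw storage for the vectors alone, in gigabytes (10**9 bytes)."""
    return num_vectors * vector_bytes(dimensions) / 1e9


print(vector_bytes(3072))  # 12288 bytes per full-size embedding
print(vector_bytes(1536))  # 6144 bytes at text-embedding-ada-002's 1,536 dimensions
print(collection_size_gb(10_000_000, 3072))
```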

Beyond the two new models, OpenAI added native support for shortening embeddings. The new models were trained with a technique that lets developers shorten an embedding by removing numbers from the end of the sequence without the embedding losing its concept-representing properties; the API exposes this through a `dimensions` parameter. This makes the models more flexible to use, OpenAI explained, since developers can trade some accuracy for smaller vectors that are cheaper to store and search.
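If you shorten vectors yourself instead of passing the `dimensions` parameter, a common approach is to keep the leading numbers and then re-normalize the result to unit length so that cosine or dot-product comparisons stay on a consistent scale. A minimal sketch, assuming a hypothetical five-number input vector:

```python
import math


def shorten_embedding(embedding: list[float], dimensions: int) -> list[float]:
    """Keep the first `dimensions` numbers, then rescale to unit length."""
    if not 0 < dimensions <= len(embedding):
        raise ValueError("dimensions must be between 1 and len(embedding)")
    head = embedding[:dimensions]  # drop numbers from the end of the sequence
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in head]


full = [0.6, 0.48, 0.36, 0.48, 0.2]  # hypothetical full-length embedding
short = shorten_embedding(full, 2)
print(short)
```

Re-normalizing matters because a truncated prefix is generally shorter than unit length, which would otherwise skew dot-product scores.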

Moving on to the other updates, OpenAI also updated GPT-3.5 Turbo, the model behind its Moderation API, and the GPT-4 Turbo preview.

The updated GPT-3.5 Turbo model, available next week, responds in requested formats more accurately and will cost 50% less for input tokens and 25% less for output tokens. The update also fixes a bug that caused text encoding problems in non-English function calls.
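Because input and output tokens are discounted by different amounts, the overall saving depends on a workload's mix. The sketch below applies the announced 50% and 25% cuts to a hypothetical workload; the token counts and starting prices are illustrative assumptions, not OpenAI's published figures.

```python
def discounted_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
    input_discount: float = 0.50,
    output_discount: float = 0.25,
) -> tuple[float, float]:
    """Return (old_cost, new_cost) in USD for one workload."""
    old_in = input_tokens / 1000 * input_price_per_1k
    old_out = output_tokens / 1000 * output_price_per_1k
    old = old_in + old_out
    new = old_in * (1 - input_discount) + old_out * (1 - output_discount)
    return old, new


# Hypothetical workload and prices, chosen only to illustrate the arithmetic.
old, new = discounted_cost(3_000_000, 1_000_000,
                           input_price_per_1k=0.001, output_price_per_1k=0.002)
print(f"old ${old:.2f} -> new ${new:.2f} ({1 - new / old:.0%} cheaper)")
```

Input-heavy workloads approach the full 50% saving, while output-heavy ones approach 25%.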

OpenAI's Moderation API identifies potentially harmful text so that developers can take action on it. The company is releasing a new model for the API, text-moderation-007, which it calls “our most robust moderation model to-date.”
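For each input, the Moderation API returns a `flagged` boolean along with per-category `categories` and `category_scores`. The sketch below works on a hand-written, trimmed-down response dict in that shape rather than a live call; the `block`/`review`/`allow` policy, the `moderation_action` helper, and the threshold are hypothetical choices an app might make.

```python
def moderation_action(response: dict, review_threshold: float = 0.3) -> str:
    """Map a parsed Moderation API response to a hypothetical app action.

    Returns "block" if the model flagged the text, "review" if any category
    score is at or above `review_threshold`, and "allow" otherwise.
    """
    result = response["results"][0]
    if result["flagged"]:
        return "block"
    if any(score >= review_threshold for score in result["category_scores"].values()):
        return "review"
    return "allow"


# Hand-written sample responses; real ones contain more categories and fields.
borderline = {"results": [{"flagged": False,
                           "categories": {"harassment": False, "violence": False},
                           "category_scores": {"harassment": 0.31, "violence": 0.02}}]}
flagged = {"results": [{"flagged": True,
                        "categories": {"harassment": True, "violence": False},
                        "category_scores": {"harassment": 0.97, "violence": 0.01}}]}

print(moderation_action(borderline))  # review
print(moderation_action(flagged))     # block
```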

The GPT-4 Turbo preview has also been updated, with more complete code generation and fewer cases in which the model fails to finish a task.

Finally, the last major group of updates concerns API management. Developers can now assign permissions to API keys for finer-grained access control, and the usage dashboard and usage export now show metrics at the API key level once tracking is enabled.

“This makes it simple to view usage on a per feature, team, product, or project level, simply by having separate API keys for each,” OpenAI wrote in a blog post.
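Once each feature or team has its own key, per-feature reporting reduces to grouping usage by key. The sketch below assumes a hypothetical row format with `api_key_name` and `tokens` fields; the real usage export's columns may differ.

```python
from collections import defaultdict

# Hypothetical usage rows, one per request or billing interval.
usage_rows = [
    {"api_key_name": "search-feature", "tokens": 1200},
    {"api_key_name": "support-bot", "tokens": 800},
    {"api_key_name": "search-feature", "tokens": 300},
]


def tokens_per_key(rows: list[dict]) -> dict[str, int]:
    """Sum token usage per API key name."""
    totals: dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row["api_key_name"]] += row["tokens"]
    return dict(totals)


print(tokens_per_key(usage_rows))  # {'search-feature': 1500, 'support-bot': 800}
```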