The Consortium for IT Software Quality (CISQ) has released two specifications to help organizations increase software quality.

Managed by the Object Management Group (OMG), CISQ was chartered in 2009 to create specifications for measuring source code quality that can be approved by the OMG.

“[The] industry needs standard, low-cost, automated measures for evaluating software size and structural quality that can be used in controlling the quality, cost and risk of software that is produced either internally or by third parties such as outsourcers,” said Bill Curtis, CISQ executive director and senior vice president and chief scientist at CAST Software.

“Structural quality represents the non-functional engineering aspects of software that are a major cause of so many of the system disasters that seem to be making headlines.”

The specifications are the CISQ Automated Function Point (AFP) sizing standard and the CISQ Software Quality Standard. Together they aim to standardize the measurement of size (through automated function points), reliability, security, performance efficiency and maintainability.

As Curtis explained, these measures simplify outsourcing contracts because service-level agreements (SLAs) involving size and structural quality can be based on internationally accepted measures rather than on what he described as a quagmire of customers using different homegrown measures for quality attributes such as security or reliability.

“[The AFP specification] provides a cheaper and [more] consistent size measure compared to the subjectivity and expense of manual counting,” he said. “Industry and government badly need automation, [and] standardized metrics of software size and quality that are objective and computed directly from source code.”

Richard Soley, CEO of the OMG, added that the market is already seeing how a specification such as AFP, which adheres to the guidelines of the International Function Point Users Group (IFPUG), can affect software quality.

“While keeping as closely as possible to the letter and spirit of IFPUG, AFP adds the important features of objectivity and automatability,” he said. “One person counting a codebase with AFP will always get the same number; two people the same. And it’s fully automated and standardized. As a way to measure, compare and follow the growing quality of a codebase, AFP is already changing the conversation around software sizing in the wild.”
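To see why a standardized count is repeatable, consider the final arithmetic of function point sizing. This minimal sketch assumes the functions have already been identified and classified (the step AFP automates from source code, which is not modeled here) and applies the IFPUG unadjusted complexity weights. The order-entry functions listed are hypothetical. Given the same classified inputs, the total never varies with who runs it.

```python
# IFPUG unadjusted function point weights by function type and complexity.
WEIGHTS = {
    "EI": {"low": 3, "average": 4, "high": 6},     # external inputs
    "EO": {"low": 4, "average": 5, "high": 7},     # external outputs
    "EQ": {"low": 3, "average": 4, "high": 6},     # external inquiries
    "ILF": {"low": 7, "average": 10, "high": 15},  # internal logical files
    "EIF": {"low": 5, "average": 7, "high": 10},   # external interface files
}


def unadjusted_function_points(functions):
    """Sum the weights of already-classified (function_type, complexity) pairs."""
    return sum(WEIGHTS[ftype][complexity] for ftype, complexity in functions)


# Hypothetical classified functions for a small order-entry application.
functions = [
    ("EI", "average"),   # create order
    ("EI", "low"),       # cancel order
    ("EO", "high"),      # monthly sales report
    ("EQ", "low"),       # look up order status
    ("ILF", "average"),  # orders data maintained by the application
    ("EIF", "low"),      # customer data owned by another system
]

print(unadjusted_function_points(functions))
```

This example totals 32 unadjusted function points. The subjectivity Curtis and Soley describe in manual counting lies mainly in the identification and classification step, which is what automation makes consistent.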

Why do we need a software quality standard?

Software quality measurement is often in the eye of the beholder, subject to the perspective and values of whoever happens to be judging the code. According to Soley, taking opinion out of the equation is what CISQ was founded to do, and the CISQ Software Quality Standard, first published in 2012, is one way to do it.

“Both [Software Engineering Institute CEO] Paul Nielsen and I were approached to try to solve the twin problems of software builders and buyers: the need for consistent, standardized quality metrics to compare providers and measure development team quality, and to lower the cost of providing quality numbers for delivered software,” he said.

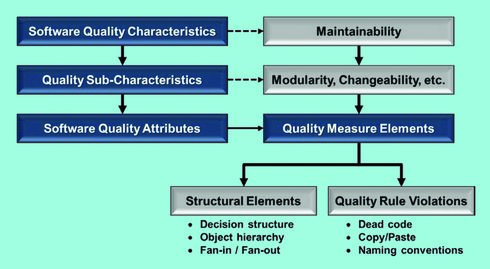

The standard defines what measuring characteristics such as reliability, security, performance efficiency and maintainability entails. Curtis said it is designed to build a common understanding between developers and enterprises of how software systems are analyzed and how architectural and coding flaws are detected.


“Structural quality is measured by looking via pattern matching and related code analysis techniques for violations of good architectural and coding practice in the source code,” he said. “Code analysis technology is now capable of analyzing very large business applications whose various layers are written in different languages and involve various frameworks and technologies. For instance, modern analysis technology can automatically detect structural flaws not only within individual components, but also among components residing in several layers of the system that are part of a flawed interaction. We can detect an entry in the user interface that skips around both the user authentication functions in the next layer as well as the authorized data access routines, and accesses the data directly. This is both a security risk and a likely cause of data corruption.”
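The cross-layer check Curtis describes can be illustrated with a minimal sketch. It assumes a hypothetical four-layer model (`ui`, `auth`, `data_access`, `database`) and a call graph that an analyzer has already extracted from the code, possibly across several languages. It flags any downward call that jumps over an intermediate layer, such as a user-interface component reading a table directly. The component names are invented for illustration; the actual CISQ weakness definitions live in the OMG specifications, not in this code.

```python
from dataclasses import dataclass

# Hypothetical layer order, from the user interface down to the data store.
LAYERS = ["ui", "auth", "data_access", "database"]


@dataclass(frozen=True)
class Component:
    name: str
    layer: str


def layer_skipping_calls(calls):
    """Return (caller, callee) name pairs where a call bypasses a layer.

    `calls` is an iterable of (caller, callee) Component pairs, assumed to come
    from a call graph built by a code analyzer. Only downward calls are checked.
    """
    violations = []
    for caller, callee in calls:
        src = LAYERS.index(caller.layer)
        dst = LAYERS.index(callee.layer)
        if dst - src > 1:  # skips at least one intermediate layer
            violations.append((caller.name, callee.name))
    return violations


login_form = Component("LoginForm", "ui")
order_form = Component("OrderForm", "ui")
authenticator = Component("Authenticator", "auth")
order_repo = Component("OrderRepository", "data_access")
orders_table = Component("ORDERS", "database")

calls = [
    (login_form, authenticator),
    (authenticator, order_repo),
    (order_repo, orders_table),
    (order_form, orders_table),  # UI reads the table directly
]

print(layer_skipping_calls(calls))
```

Run as written, it flags only the `OrderForm` call to `ORDERS`. A check like this can only run once components from every layer are analyzed together, which is why Curtis notes that such weaknesses surface at build integration rather than within a single component.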

Curtis explained how executives, application managers and developers could use code analysis tools that follow the CISQ Software Quality Standard to identify which applications present the greatest risk to their business or involve the highest cost of ownership.

“Application managers can use these measures to track trends across releases in the structural quality characteristics of their systems,” Curtis said. “Developers will be able to discover multi-component, inter-layer weaknesses that are impossible to detect until they are integrated in a build, weaknesses that must take priority because of the breadth of their potential damage. Developers can use the analysis output to identify and prioritize the riskiest or costliest flaws for correction. Finally, customers can use these measures to evaluate the quality of the software their system integrators and outsourcers are delivering.”

As for what to do once these software quality risks are uncovered, Curtis said the minimum requirement for IT organizations is to set quality measurement thresholds for critical business systems and to monitor compliance with those thresholds at each delivery or software release. He also recommended removing unacceptable flaws in subsequent releases.
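The threshold practice can be sketched in a few lines. The characteristic names follow the four CISQ quality characteristics, but the threshold values, the release data and the choice to count critical violations per characteristic are hypothetical assumptions; an organization would substitute whatever measures its analysis tool actually reports. The `trend` helper illustrates the release-over-release tracking Curtis mentions for application managers.

```python
# Hypothetical maximum allowed counts of critical violations per release,
# set by an IT organization for one business-critical system.
THRESHOLDS = {
    "reliability": 5,
    "security": 0,
    "performance_efficiency": 10,
    "maintainability": 40,
}


def threshold_breaches(measures, thresholds):
    """Return, sorted, the characteristics whose violation count exceeds its limit.

    In this sketch a characteristic missing from `measures` counts as zero.
    """
    return sorted(
        name for name, limit in thresholds.items()
        if measures.get(name, 0) > limit
    )


def trend(previous, current):
    """Change in violation count per characteristic between two releases."""
    return {name: current.get(name, 0) - previous.get(name, 0) for name in current}


# Hypothetical measurements for two releases.
release_1 = {"reliability": 4, "security": 1, "performance_efficiency": 8, "maintainability": 35}
release_2 = {"reliability": 3, "security": 0, "performance_efficiency": 12, "maintainability": 38}

for label, measures in [("1.0", release_1), ("2.0", release_2)]:
    breaches = threshold_breaches(measures, THRESHOLDS)
    print(label, "accepted" if not breaches else f"rejected: {breaches}")

print(trend(release_1, release_2))
```

With these inputs, release 1.0 is rejected for security and release 2.0 for performance efficiency, even though 2.0 fixes the security violation. That is the kind of signal a per-release compliance check is meant to surface.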

Beyond that minimum, Curtis said, IT professionals who want to manage application development and maintenance quantitatively, the way management uses numbers to run the rest of the business, should embrace the standard.

“We have entered the era of nine-digit defects—software flaws that cost the company over $100 million,” Curtis said. “Knight Trading, RBS, Target, to name just a few, have suffered extensive financial and reputational damage from software disasters. Structural flaws in business-critical systems and the level of risk to which they can expose the business have become boardroom issues. Executive management needs a way to evaluate software risk and cost, and they do not want proprietary measures. They want standards that help them govern and perform due diligence based on sound measures developed from industry experience that assess risk and cost.”

Adoption and software quality’s future

CISQ’s members and sponsor companies, which include IT executives, system integrators and software technology vendors, are still working to improve the standard and debating which software metrics and code quality weaknesses it should include, work that the OMG’s specification revision process supplements. The OMG is still approving some aspects of the standard, but Curtis expects adoption to rise sharply.

“The CISQ Quality Characteristic Standards are still going through their approval process at OMG, so we are ahead of the adoption phase,” he said. “However, if the rapid adoption of CISQ’s standard for Automated Function Points is any indication, we expect many IT organizations will begin using the quality characteristic standards as SLAs in their outsourcing agreements very soon after the standards are approved by OMG. The standards were created because IT customers and suppliers both asked for them and participated in their development. Therefore, adoption will be driven by a pull rather than by a push.”

To make a specification like this work, a consortium such as the CISQ must not only put forth the effort to change long-established traditions in software quality, but it must also have the backing of a standards organization like the OMG to ensure an open process to foster implementation. OMG’s Soley explained how the two organizations work together toward the same goal.

“CISQ brings together, under an OMG and [Software Engineering Institute] umbrella, the best and the brightest in software artifact quality,” he said. “Then CISQ relies on the 25-year-old, open, neutral, international OMG process to ensure that open standards exist and implementations exist too. There’s no value to a standard with no implementation; OMG’s process ensures there is at least one, and usually more. This means CISQ standards get implemented and used, and are portable across toolsets and consultants. As CISQ continues to attack the metrics that matter to its members (starting with AFP, then security, then other quality metrics), we see the codebases of organizations improving.”

On a larger scale, CISQ has taken on the task of raising the structural quality of IT software. In the short term, Curtis said, CISQ wants to raise awareness of the risk and cost of structurally weak software so that quality assurance addresses structural weaknesses with the same discipline and importance with which it addresses functional weaknesses. In the long term, the goal is to lower the risk and cost of IT software to society, removing another barrier to enhancing the capabilities of computational systems.

“Measurement standards help by providing a foundation for focusing attention, setting quality targets, providing visibility, and tracking improvement progress,” Curtis said. “By using violations of good architectural and coding practice as the basis for measurement, we have seen teams learn from the results and avoid whole classes of weaknesses in future development. If the structural quality of software in business improves, we succeeded.”

Standards, standards everywhere

When it comes to standardizing how software quality is measured, there are many approaches. The CISQ Software Quality Standard assesses characteristics of existing code, while various specifications and standards from organizations such as ISO and IEC approach software quality through the development process, through testing methods, or at the product level.

Standards and specifications such as Capability Maturity Model Integration (CMMI), Test Maturity Model Integration (TMMI), the ISO/IEC 25000 series standards and the ISO/IEC/IEEE 29119 software testing standard all occupy similar, overlapping spaces. Curtis explained which ones complement which, how the standards work together, and what makes the CISQ Software Quality Standard stand out.

CMMI: “CMMI is a process standard, and CMMI appraisals do not evaluate the quality of the software produced, so the CISQ standards are complementary to CMMI. In fact, CISQ measures could be used in a sophisticated appraisal to determine whether a purported high-maturity organization is producing the quality of software expected from high-maturity practices.”

OMG’s Soley added, “It was clear that while CMMI is important to understanding the software development process, it doesn’t provide feedback on the artifacts developed. Just as major manufacturers agree on specific processes with their supply chains, but also test parts as they enter the factory, software developers and acquirers should have consistent, standard metrics for software quality.”

ISO/IEC 25000 series: “The ISO/IEC 25000 series standards concern software and system product quality. ISO/IEC 25010 does a good job of defining eight quality characteristics conceptually,” said Curtis. “However, ISO/IEC 25023, the standard that defines actual software quality measures, does not do so at the internal, source-code level. Rather, it defines software quality characteristics in terms of the external behavior of the software. Thus, software quality cannot be measured and addressed until the software has been released to test or operations. The CISQ measures allow software quality to be assessed during development…thus, the CISQ measures supplement those in the ISO standard to allow a more complete life-cycle assessment of software quality.”


ISO 29119 and TMMI: “CISQ measures, based on known architectural and coding weaknesses, add a critical new dimension to the quality practices incorporated into testing process standards such as ISO/IEC/IEEE 29119 and the recently published TMMI,” said Curtis. “As was the case with CMMI, CISQ provides structural software product measures that are supplementary, since they can be used within any testing or quality assurance process that incorporates static analysis.”