Google is giving developers a way to run machine learning models on mobile and embedded devices. The company has announced a developer preview of TensorFlow Lite, a lightweight version of TensorFlow, its open-source software library for machine intelligence.

“TensorFlow has always run on many platforms, from racks of servers to tiny IoT devices, but as the adoption of machine learning models has grown exponentially over the last few years, so has the need to deploy them on mobile and embedded devices. TensorFlow Lite enables low-latency inference of on-device machine learning models,” the TensorFlow team wrote in a post.

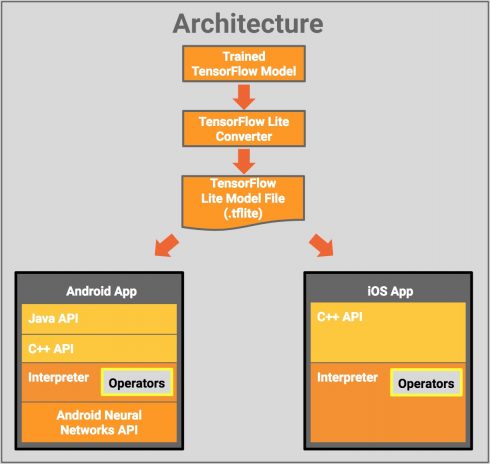

The developer preview includes a set of core operators for creating and running custom models, a new FlatBuffers-based model file format, an on-device interpreter with kernels, the TensorFlow converter, and pre-tested models. In addition, TensorFlow Lite supports the Android Neural Networks API and provides Java and C++ APIs.

According to Google, developers should look at TensorFlow Lite as an evolution of the TensorFlow Mobile API, which already supports mobile and embedded deployment of models. As TensorFlow Lite matures, it will become the recommended mobile solution. For now, TensorFlow Mobile will continue to support production apps.

“The scope of TensorFlow Lite is large and still under active development. With this developer preview, we have intentionally started with a constrained platform to ensure performance on some of the most important common models. We plan to prioritize future functional expansion based on the needs of our users. The goals for our continued development are to simplify the developer experience, and enable model deployment for a range of mobile and embedded devices,” the team wrote.