OpenAI has developed a new approach to improving artificial intelligence (AI) safety. The technique is called iterated amplification, and it is meant to give developers a training signal for tasks that are too complex for humans to perform or judge directly.

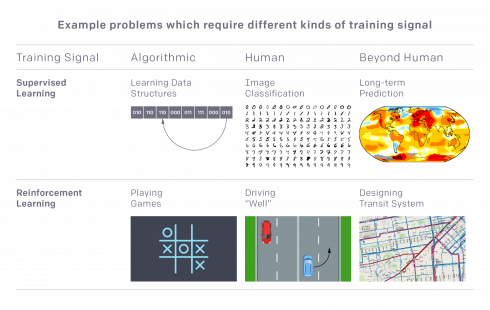

Currently, when machine learning systems are trained to perform a task, researchers need a training signal that evaluates how well the system is doing in order to help it learn. The goal can sometimes be evaluated algorithmically, but most real-world tasks cannot be evaluated in this way, and some are too complicated for humans to perform or judge, such as designing a complex transit system or managing the security of a large network of computers, according to OpenAI.

OpenAI explained that iterated amplification can generate training signals for those types of cases, under certain assumptions. Even when a human cannot perform or judge a whole task, the technique assumes that they can identify smaller components of the task. It also assumes that humans can perform very small instances of the task.

For example, in the computer network situation, a human could break down tasks into “consider attacks on the servers,” “consider attacks on the routers,” and “consider how the previous two attacks might interact.” They could also perform the task of identifying whether or not a specific line in a log file is suspicious.

If both assumptions hold, a training signal for big tasks can be built up from human training signals for small tasks.
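To make the idea concrete, here is a minimal Python sketch of the decomposition step only. It is not OpenAI's implementation: the function names, the "FAILED LOGIN" rule, and the split-in-half strategy are all hypothetical stand-ins for human judgments, and no machine learning model is trained. It simply shows how an answer for a big task (counting suspicious lines in a whole log) can be assembled from human answers on very small tasks (judging one line).

```python
from typing import List


def human_judges_line(line: str) -> bool:
    """Hypothetical stand-in for a human judging ONE log line (the small task)."""
    return "FAILED LOGIN" in line


def human_decomposes(log: List[str]) -> List[List[str]]:
    """Hypothetical stand-in for a human splitting a big task into two smaller ones."""
    mid = len(log) // 2
    return [log[:mid], log[mid:]]


def amplified_count(log: List[str]) -> int:
    """Count suspicious lines using only small human judgments and decomposition."""
    if not log:
        return 0
    if len(log) == 1:
        # Small enough for the human to answer directly.
        return int(human_judges_line(log[0]))
    # Too big to judge at once: split, solve the parts, and add up the answers.
    return sum(amplified_count(part) for part in human_decomposes(log))


log = ["ok", "FAILED LOGIN root", "ok", "FAILED LOGIN admin", "ok"]
print(amplified_count(log))  # prints 2
```

In the technique as OpenAI describes it, the combined answers would serve as a training signal for a learned system; the sketch leaves that training step out and keeps only the way small judgments compose into a large one.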

“If the process works, the end result is a totally automated system that can solve highly composite tasks despite starting with no direct training signal for those tasks. This process is somewhat similar to expert iteration (the method used in AlphaGo Zero), except that expert iteration reinforces an existing training signal, while iterated amplification builds up a training signal from scratch. It also has features in common with several recent learning algorithms that use problem decomposition on-the-fly to solve a problem at test time, but differs in that it operates in settings where there is no prior training signal,” the OpenAI team wrote in a post.

The team stated that the idea is still in the very early stages of development and that it has only been tested on simple toy algorithmic domains. The team decided to share the work at this early stage in the hope that it could prove to be a scalable approach to AI safety.