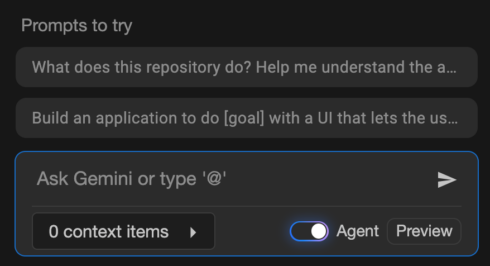

Agent Mode in Gemini Code Assist now available in VS Code and IntelliJ

The mode was introduced to the VS Code Insiders Channel last month. It extends Code Assist beyond prompts and responses to support multi-file edits, full project context, built-in tools, and integration with ecosystem tools.

Since the mode was added to the Insiders Channel, it has gained several new features, including the ability to edit code changes using Gemini’s Inline diff, user-friendly quota updates, real-time shell command output, and state preservation between IDE restarts.

Separately, Google announced new agentic capabilities in its AI Mode in Search, such as the ability to make dinner reservations based on factors like party size, date, time, location, and preferred type of food. U.S. users who have opted into the AI Mode experiment in Labs will also now see results that are more specific to their own preferences and interests. The company also announced that AI Mode is now available in over 180 new countries.

GitHub’s coding agent can now be launched from anywhere on the platform using new Agents panel

GitHub has added a new panel to its UI that enables developers to invoke the Copilot coding agent from anywhere on the site.

From the panel, developers can assign background tasks, monitor running tasks, or review pull requests. The panel is a lightweight overlay on GitHub.com, but developers can also open the panel in full-screen mode by clicking “View all tasks.”

The agent can be launched from a single prompt, like “Add integration tests for LoginController” or “Fix #877 using pull request #855 as an example.” It can also run multiple tasks simultaneously, such as “Add unit test coverage for utils.go” and “Add unit test coverage for helpers.go.”

Anthropic adds Claude Code to Enterprise, Team plans

With this change, both Claude and Claude Code will be available under a single subscription. Admins will be able to assign standard or premium seats to users based on their individual roles. By default, seats include enough usage for a typical workday, but additional usage can be added during periods of heavy use. Admins can also create a maximum limit for extra usage.

Other new admin settings include a usage analytics dashboard and the ability to deploy and enforce settings, such as tool permissions, file access restrictions, and MCP server configurations.

Microsoft adds Copilot-powered debugging features for .NET in Visual Studio

Copilot can now suggest appropriate locations for breakpoints and tracepoints based on current context. Similarly, it can troubleshoot non-binding breakpoints and walk developers through the potential cause, such as mismatched symbols or incorrect build configurations.

Another new feature is the ability to generate LINQ queries on massive collections in the IEnumerable Visualizer, which renders data into a sortable, filterable tabular view. For example, a developer could ask for a LINQ query that will surface problematic rows causing a filter issue. Additionally, developers can hover over any LINQ statement and have Copilot explain what it is doing, evaluate it in context, and highlight potential inefficiencies.

Copilot can also now help developers deal with exceptions by summarizing the error, identifying potential causes, and offering targeted code fix suggestions.

Groundcover launches observability solution for LLMs and agents

The eBPF-based observability provider groundcover announced an observability solution specifically for monitoring LLMs and agents.

It captures every interaction with LLM providers like OpenAI and Anthropic, including prompts, completions, latency, token usage, errors, and reasoning paths.

Because groundcover uses eBPF, it operates at the infrastructure layer and can achieve full visibility into every request. This allows it to follow the reasoning path of failed outputs, investigate prompt drift, or pinpoint when a tool call introduces latency.
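To make the last point concrete, here is a minimal Python sketch of how captured interaction records could be used to find the tool call that adds the most latency. The announcement does not describe groundcover's data model or API, so the `LLMSpan` schema, its field names, and the sample trace are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LLMSpan:
    """One captured step in an agent run (hypothetical schema, not groundcover's)."""
    name: str
    kind: str  # "llm_call" or "tool_call"
    latency_ms: float
    total_tokens: int = 0
    error: Optional[str] = None


def slowest_tool_call(spans):
    """Return the tool call with the highest latency, or None if there are none."""
    tool_calls = [s for s in spans if s.kind == "tool_call"]
    return max(tool_calls, key=lambda s: s.latency_ms, default=None)


# Illustrative trace; names and numbers are made up for the example.
trace = [
    LLMSpan("plan", "llm_call", 420.0, total_tokens=310),
    LLMSpan("search_orders", "tool_call", 2150.0),
    LLMSpan("lookup_customer", "tool_call", 95.0),
    LLMSpan("answer", "llm_call", 610.0, total_tokens=540),
]

slowest = slowest_tool_call(trace)
print(slowest.name, slowest.latency_ms)
```

In a real deployment the records would come from the observability backend rather than a hand-built list; the point is only that per-step latency makes the slow step easy to isolate.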

IBM and NASA release open-source AI model for predicting solar weather

The model, Surya, analyzes high-resolution solar observation data to predict how solar activity affects Earth. According to IBM, solar storms can damage satellites, affect airline travel, and disrupt GPS navigation, which in turn can hurt industries like agriculture and disrupt food production.

The solar images that Surya was trained on are 10x larger than typical AI training data, so the team had to create a multi-architecture system to handle them.

The model was released on Hugging Face.

Preview of NuGet MCP Server now available

Last month, Microsoft announced support for building MCP servers with .NET and then publishing them to NuGet. Now, the company is announcing an official NuGet MCP Server to integrate NuGet package information and management tools into AI development workflows.

“Since the NuGet package ecosystem is always evolving, large language models (LLMs) get out-of-date over time and there is a need for something that assists them in getting information in realtime. The NuGet MCP server provides LLMs with information about new and updated packages that have been published after the models as well as tools to complete package management tasks,” Jeff Kluge, principal software engineer at Microsoft, wrote in a blog post.

Opsera’s Codeglide.ai lets developers easily turn legacy APIs into MCP servers

Codeglide.ai, a subsidiary of the DevOps company Opsera, is launching an MCP server lifecycle platform that enables developers to turn APIs into MCP servers.

The solution constantly monitors API changes and updates the MCP servers accordingly. It also provides context-aware, secure, and stateful AI access without the developer needing to write custom code.

According to Opsera, large enterprises may maintain 2,000 to 8,000 APIs, 60% of which are legacy APIs, and MCP provides a way for AI to efficiently interact with those APIs. The company says that this new offering can reduce AI integration time by 97% and costs by 90%.

Confluent announces Streaming Agents

Streaming Agents is a new feature in Confluent Cloud for Apache Flink that brings agentic AI into data stream processing pipelines. It enables users to build, deploy, and orchestrate agents that can act on real-time data.

Key features include tool calling via MCP, the ability to connect to models or databases using Flink, and the ability to enrich streaming data with non-Kafka data sources, like relational databases and REST APIs.
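As a rough illustration of the enrichment idea, the Python sketch below joins each streaming event with a row from an external lookup table before an agent would see it. This is not Flink or Confluent code. The `enrich` function, the in-memory `customers` dictionary standing in for a relational database, and the event fields are all assumptions made for the example.

```python
def enrich(events, customers):
    """Yield each event merged with its matching customer row, if one exists."""
    for event in events:
        # Events with no matching row pass through unchanged.
        row = customers.get(event["customer_id"], {})
        yield {**event, **row}


# Stand-in for a relational table keyed by customer ID (hypothetical data).
customers = {
    "c1": {"tier": "gold", "region": "EU"},
    "c2": {"tier": "basic", "region": "US"},
}

# Stand-in for a stream of order events (hypothetical data).
stream = [
    {"order_id": 1, "customer_id": "c1", "amount": 120.0},
    {"order_id": 2, "customer_id": "c3", "amount": 15.5},
]

enriched = list(enrich(stream, customers))
for record in enriched:
    print(record)
```

A production pipeline would do this lookup inside the stream processor against a live source; the sketch only shows the shape of the data before and after enrichment.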

“Even your smartest AI agents are flying blind if they don’t have fresh business context,” said Shaun Clowes, chief product officer at Confluent. “Streaming Agents simplifies the messy work of integrating the tools and data that create real intelligence, giving organizations a solid foundation to deploy AI agents that drive meaningful change across the business.”